How to use R, a Tableau connection to R and Calculated Fields to store and load the underlying data of a defined Tableau View in a Database or Text File (part 2 of 2)

This is the second part of Michael Martin’s guest post on how to use Tableau and R to read and write the underlying data of a Tableau view from / to an ODBC database.

This is the second part of Michael Martin’s guest post on how to use Tableau and R to read and write the underlying data of a Tableau view from / to an ODBC database.

Michael is the Managing Partner at Business Information Arts, Inc, Tableau Partner, Tableau Certified Consultant, Tableau Instructor and leader of the Toronto Tableau User Group. Last, but not least, Michael is the only person I know who is using Tableau Software since version 1.0.

NOTE: You may need to zoom into some of the screen shots below for legibility reasons. Also, the text below contains references to the “Level of Detail Shelf“. This is synonymous with the Detail Pill in the Tableau Marks Card within the Tableau 9.x and 10.x user interface. As mentioned in the introduction to part one of this post, including a ‘$’ character as part of an RODBC object name (for example crs$odbc, crs$odbc.tables, crs$dataset) could cause an error on your system, and the affected calculation will fail. If this happens, replace instances of $ within an RODBC object name with another character within the affected calculation, such as an underscore – i.e. _.

Supporting files and a README file are available for download at the end of this part of the post. Please look at the README file first.

The author would like to thank Leonid Koyfman for his suggestions.

The first part of this post walked you through the process of

- downloading and installing R and RStudio

- installing the required R packages,

- setting up the ODBC database connection

- writing the Calculated Fields in Tableau to read the underlying data of a Tableau view from a database

In the first part, we have only run SELECT queries, but we could also run INSERT, DELETE, UPDATE or INSERT INTO (Append data) queries – all we need to be is pass a valid SQL statement to a Calculated Field that establishes an ODBC connection to the Database and run the SQL statement.

Today’s second part of the post will

- show how to insert data of a Tableau view into a database

- demonstrate the necessity of aligning the orientation of the view and the Table Calculation

- show how to perform data definition queries

- provide the general caveats of the technique

- demonstrate an analysis technique that leverages multiple exports of a Tableau View based on the Superstore Dataset to SQL Server

- give some insights on performance and upper limits

- provide some useful links regarding the integration of Tableau and R

Insert Data of a Tableau View into a Database

Our next example INSERTS data in a Tableau view into a table in the database.

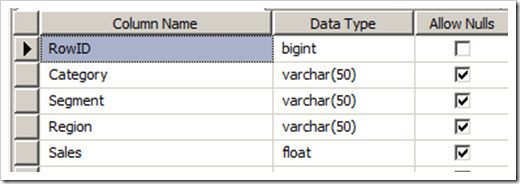

Within the database with the Superstore Data, create a new table called Data_From_Tableau with the following column definitions:

The RowID column is optional and should be defined as an IDENTITY column in SQL Server that gives each row a unique number at load time. This will be the destination table for an INSERT statement that runs within a Tableau Calculated Field.

Create a new sheet in Tableau – connected to the Superstore Orders Table in your Database.

Define a Calculated Field named CONVERT Header and set it to:

‘CONVERT(float,’

We’ll see why we need this Calculation shortly.

Create another Calculation named SQLINSERTStatement

‘INSERT INTO Data_From_Tableau ([Category],[Segment],[Region],[Sales]) VALUES (‘ +

“‘” + ATTR([Category]) + “‘” + ‘,’ + “‘” + ATTR([Segment]) + “‘” + ‘,’ + “‘” + ATTR([Region]) + “‘” + ‘,’ + [CONVERT Header] + STR(SUM([Sales])) + ‘)’ + ‘)’

Now create a Calculated Field named Insert INTO Data from Tableau

SCRIPT_STR(“

library(RODBC)

crs$odbc <- odbcConnect(‘Tableau_Superstore_Orders’, believeNRows=TRUE)

crs$odbc.tables <- sqlTables(crs$odbc)$TABLE_NAME

crs$dataset <- sqlQuery(crs$odbc,.arg1)

close(crs$odbc)

‘Check Your Database to see Inserted Rows’”

,[SQLINSERTStatement]

)

The pattern is familiar. An ODBC connection is established and a query is run. This time, we run an INSERT query to insert data in the database that Tableau has “materialized” in the view.

As the SQLINSERTStatement Calculation needs to be a valid string from a Tableau point of view, we cannot include the literal text of SUM(Sales)) in the Calculation as the Calculation would no longer evaluate to a text string because Sales is a numeric field. The other values to be inserted (Category, Segment, and Region) are string values. However, the destination field in the database for Sales is numeric (float). To get around this, and since in my case, the destination database is SQL Server, I use a call to the CONVERT function to convert the call to the Tableau STR function to a float.

Using the CONVERT Header “sub-calculation” within the SQLINSERTStatement Calculation results in Tableau passing the call to the CONVERT function to the database (SQL Server in this case) in the correct format.

If the SQLINSERTStatement Calculation was:

‘INSERT INTO Data_From_Tableau ([Category],[Segment],[Region],[Sales]) VALUES (‘ +

“‘” + ATTR([Category]) + “‘” + ‘,’ + “‘” + ATTR([Segment]) + “‘” + ‘,’ + “‘” + ATTR([Region]) + “‘” + ‘,’ + “‘” + ‘CONVERT(float,’ + STR(SUM([Sales])) + ‘)’ + ‘)’

At run time, the calculation would evaluate to:

INSERT INTO Data_From_Tableau ([Category],[Segment],[Region],[Sales])

VALUES (‘Furniture’,’Consumer’,’East’,

‘CONVERT(float,114211.80200000001))

and the INSERT would fail because of the quote before the word CONVERT as there is no SQL Server function named ‘CONVERT.

Using the Convert Header calculation within the INSERT statement leads to a different (and better) result at run time:

INSERT INTO Data_From_Tableau ([Category],[Segment],[Region],[Sales])

VALUES (‘Furniture’,’Consumer’,’Central’,

CONVERT(float,86229.218999999968))

Note the absence of a quote before the word CONVERT. This makes all the difference to the database at runtime as the word CONVERT is passed directly to (in my case SQL Server) to run without the preceding quotation mark. Since CONVERT is a recognized T-SQL function, it works as expected. With a Microsoft Access database, we would have used the CDBL Function. Like SQL Server, Oracle uses a CONVERT function to convert values into different datatypes.

NOTE: Some database platforms will coerce a STRING value to a NUMERIC one when INSERTING a new row of data (i.e. perform the data conversion). In this case, you would not need to define and use the CONVERT Header calculation referred to above.

Thanks to Leonid Koyfman for suggesting an alternate and simpler version of the SQLINSERTStatement Calculation below:

‘INSERT INTO Data_From_Tableau ([Category],[Segment],[Region],[Sales]) VALUES (‘ + “‘” + ATTR([Category]) + “‘” + ‘,’ + “‘” + ATTR([Segment]) + “‘” + ‘,’ + “‘” + ATTR([Region]) + “‘” + ‘,’ + “1.0*” + STR(SUM([Sales])) + ‘)’

In the code above, the value of 1.0 is multiplied by STR(SUM(Sales)). This technique will perform the conversion from STRING to FLOAT calculation against a SQL Server back end – without having to use the CONVERT HEADER “sub-calculation” referred to previously, which simplifies the calculation. Hopefully, this technique will also work with your database server.

Turn auto auto-updates off in the new sheet you recently created.

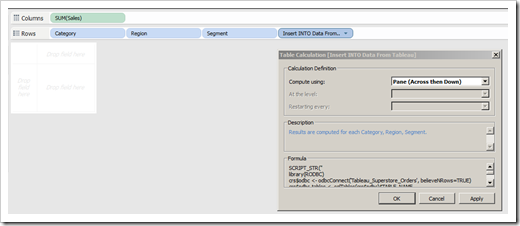

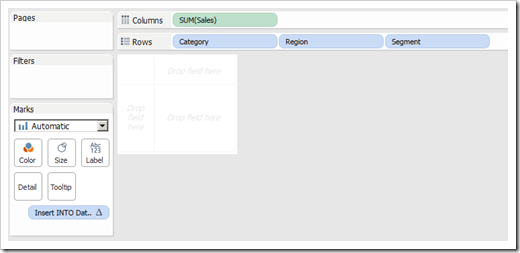

Then lay out the new sheet as shown below:

Remember that calculations that use R are Table Calculations in Tableau. Configure the Insert INTO Data From Tableau Table Calculation to run Pane (Across then Down) .

You could also put the Table Calculation in the Level of Detail Shelf as shown below:

Confirm that the Table Calculation is set to run Pane (Across then Down) – which matches the way the view is laid out.

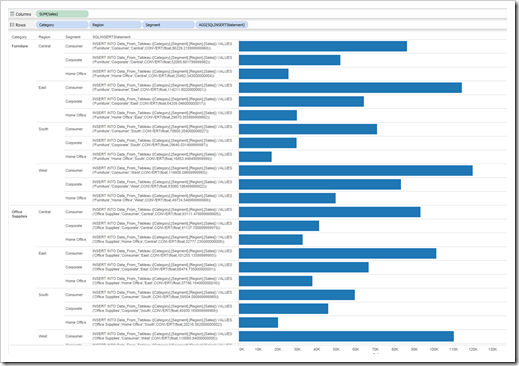

Now do a manual update of the sheet (F9). After a few seconds (assuming that the Insert INTO Data from Tableau calculation is in the Level of Detail Shelf), your sheet will look like:

We can see how Tableau evaluated the SQL INSERT Statement at run time. This is useful for double checking your SQL statement syntax.

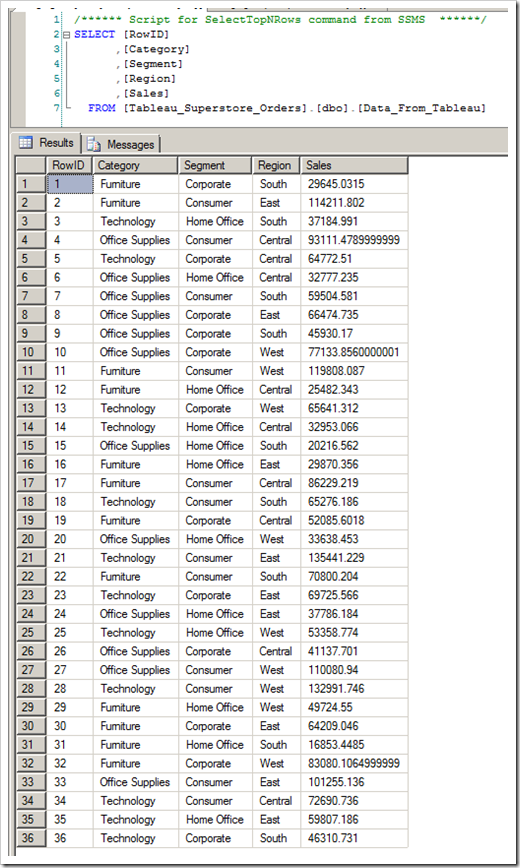

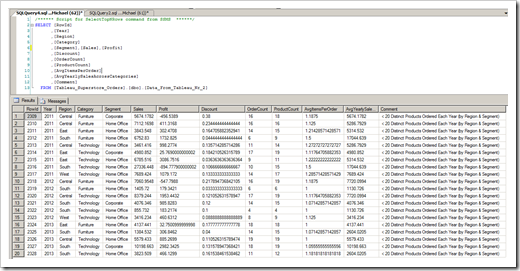

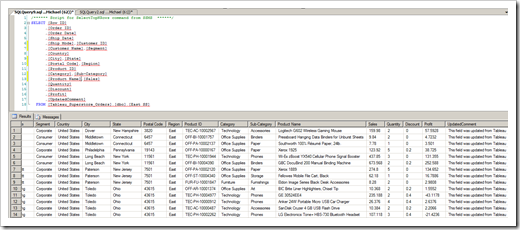

If you go to your database and query the Table you just inserted rows into, you should see:

This is the data that Tableau aggregated and “materialised” in the view.

You can make changes to the view (for example, filtering) and if you update the sheet manually, the updated data will be appended to the Data_From_Tableau Table in the Database.

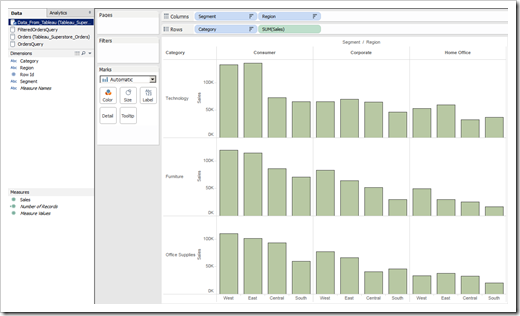

If you connect to the Data_From_Tableau table in Tableau, you can make a visualisation based on the data you inserted as shown below:

Insert Filtered Tableau Views into a Database

Let’s look at another example, which takes what we have done so far a step further.

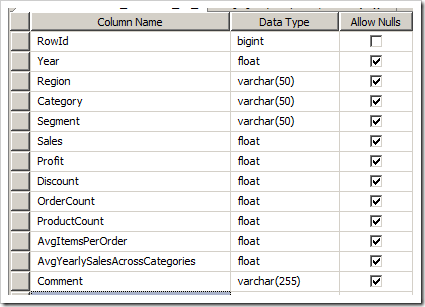

To start, create a second table named Data_From_Tableau_Nr_2 with the structure below in your database:

Once again, the RowID column is optional and should be defined as an IDENTITY column in SQL Server that gives each row a unique number at load time. This will be the destination table for an INSERT statement that runs within a Tableau Calculated Field.

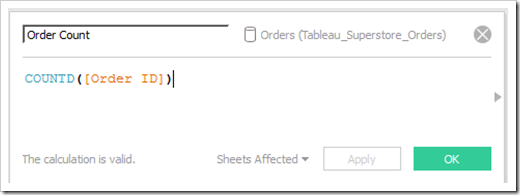

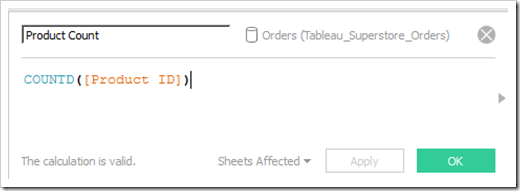

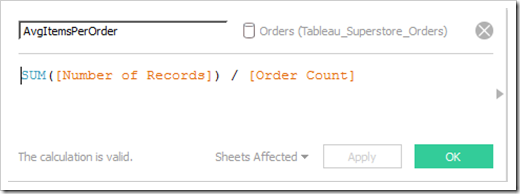

In a new sheet, connected to the Superstore Orders Data in the back end Database, create the following calculations:

We then define Calculated Field named SQLINSERTStatementNr2:

‘INSERT INTO Data_From_Tableau_Nr_2 ([Year],[Region],[Category],[Segment],[Sales],[Profit],[Discount],

[OrderCount],[ProductCount],[AvgItemsPerOrder],

[AvgYearlySalesAcrossCategories],[Comment]) VALUES (‘ +

“‘” + STR(AVG(YEAR([Order Date]))) + “‘” + ‘,’ + “‘” + ATTR([Region]) + “‘” + ‘,’

+ “‘” + ATTR([Category]) + “‘” + ‘,’ + “‘” + ATTR([Segment]) + “‘” + ‘,’

+ [CONVERT Header] + STR(SUM([Sales])) + ‘)’ + ‘,’

+ [CONVERT Header] + STR(SUM([Profit])) + ‘)’ + ‘,’

+ [CONVERT Header] + STR(AVG([Discount])) + ‘)’ + ‘,’

+ [CONVERT Header] + STR([OrderCount]) + ‘)’ + ‘,’

+ [CONVERT Header] + STR([ProductCount]) + ‘)’ + ‘,’

+ [CONVERT Header] + STR([AvgItemsPerOrder]) + ‘)’ + ‘,’

+ [CONVERT Header] + STR([AvgYearlySales]) + ‘)’ + ‘,’

+ “‘” + [Comment] + “‘” + ‘)’

This is a more complex version of the INSERT query we wrote before. As the saying goes, “The devil is in the details”.

Alternatively, you could use the methodology suggested by Leonid Koyfman discussed earlier and write the calculation as:

‘INSERT INTO Data_From_Tableau_Nr_2 ([Year],[Region],[Category],[Segment],[Sales],[Profit],[Discount],

[OrderCount],[ProductCount],[AvgItemsPerOrder],

[AvgYearlySalesAcrossCategories],[Comment]) VALUES (‘ +

“‘” + STR(AVG(YEAR([Order Date]))) + “‘” + ‘,’ + “‘” + ATTR([Region]) + “‘” + ‘,’

+ “‘” + ATTR([Category]) + “‘” + ‘,’ + “‘” + ATTR([Segment]) + “‘” + ‘,’

+ “1.0*” + STR(SUM([Sales])) + ‘,’

+ “1.0*” + STR(SUM([Profit])) + ‘,’

+ “1.0*” + STR(AVG([Discount])) + ‘,’

+ “1.0*” + STR([OrderCount]) + ‘,’

+ “1.0*” + STR([ProductCount]) + ‘,’

+ “1.0*” + STR([AvgItemsPerOrder]) + ‘,’

+ “1.0*” + STR([AvgYearlySales]) + ‘,’

+ “‘” + [Comment] + “‘” + ‘)’

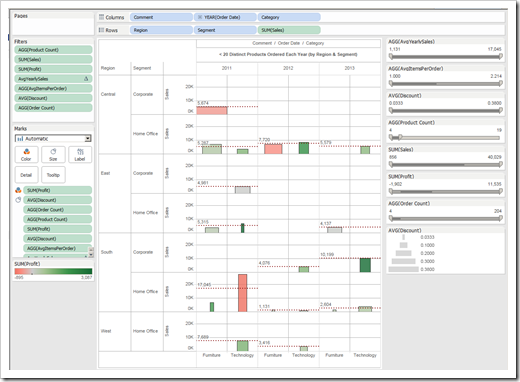

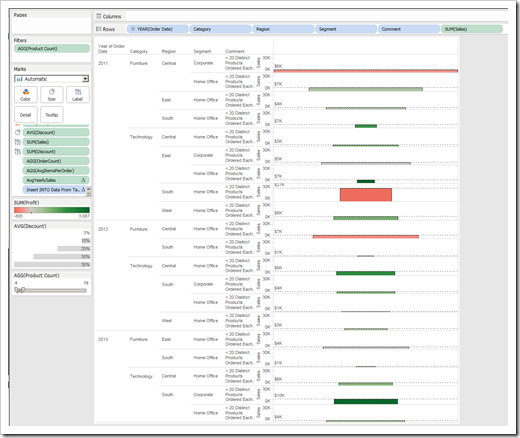

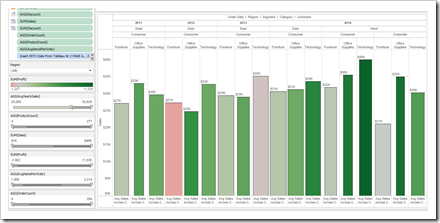

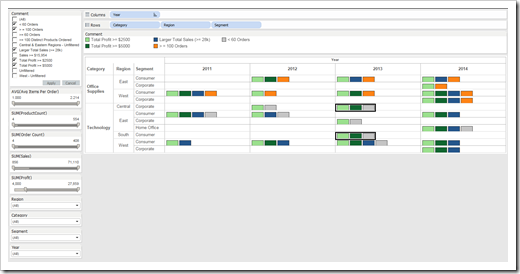

With a connection to the Superstore Orders table, build a view like the one below.

Note that many fields are set as filters. Within each region and customer segment, the view is showing in which categories fewer than 20 distinct products were ordered in a given year in a given region within a given customer segment. The sales metric is sized by profit, and the bars are sized by discount. Note that the aggregation for discount needs to be set to average.

The following fields are in the Level of Detail Shelf:

- Order Count

- Product Count

- Profit

- Discount

- AvgItemsPerOrder

- AvgYearlySales

Note that I have configured a Reference Line to show average sales as follows:

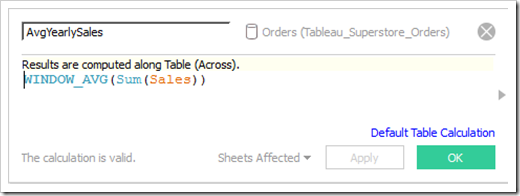

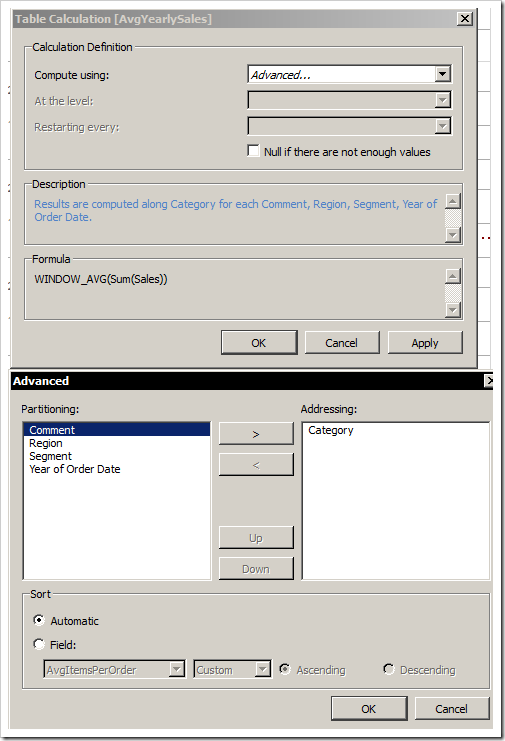

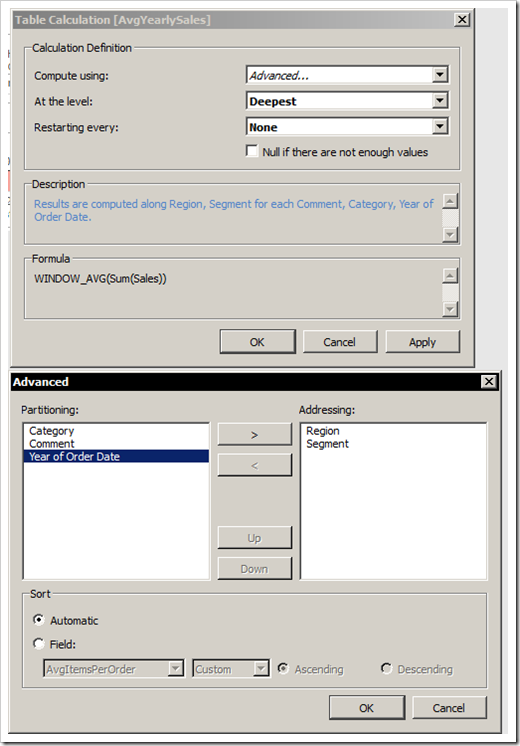

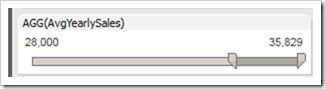

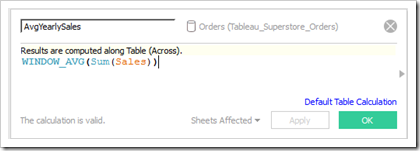

In the view above, the AvgYearlySales Table Calculation needs to be configured to run as follows:

The Table Calculation now performs the same calculation as the Reference Line I placed in the view.

If we want to INSERT a field or calculation into the database, it needs to be either in the view or in the Level of Detail Shelf. We can then include the field or calculation in the INSERT statement.

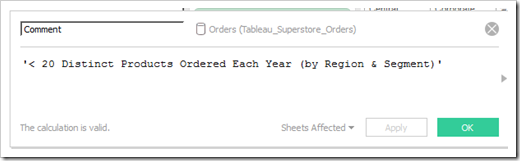

I have also created a Calculated Field named Comment:

The Comment describes the how the view is currently filtered. When the data is inserted into the database, I’m reminded of the profile of the data that was inserted.

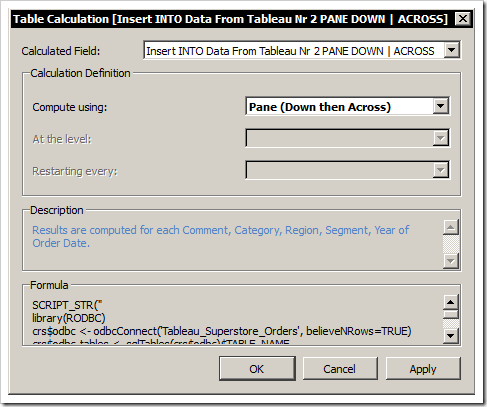

We need to define one last Calculated Field (Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN) to run the INSERT query:

SCRIPT_STR(“

library(RODBC)

crs$odbc <- odbcConnect(‘Tableau_Superstore_Orders’, believeNRows=TRUE)

crs$odbc.tables <- sqlTables(crs$odbc)$TABLE_NAME

crs$dataset <- sqlQuery(crs$odbc,.arg1)

close(crs$odbc)

‘INSERT Query Ran – Connect to Data If Not Already Connected’”

,[SQLINSERTStatementNr2]

)

This calculation needs to be either in the view or in the Level of Detail Shelf in order to be run by updating the worksheet – but we’re not going to use it just yet – so don’t put the calculation in the view or in the Level of Detail Shelf – for now.

We can use the current view to explore the Data, find patterns of interest, tag these patterns using the Comment calculation (which means you need to edit the Comment Calculated Field so that your filtering is correctly described), and then INSERT the Data in the view (that Tableau “materialises” for us) into the database.

Alignment of the Orientation of the View and the Table Calculation

However, there’s a catch. If we don’t lay out the view a certain way, Tableau will not INSERT all of the data in the view, no matter how we configure calculation Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN to run. Only some partitions of data – but not all of the data, will be inserted.

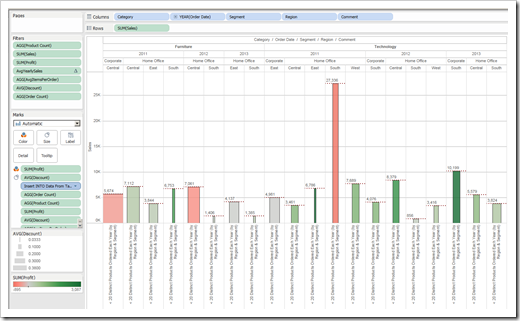

Fortunately, there is a work-around. If I create a new worksheet and lay out the same view as shown below, the SQL INSERT will work as expected.

The view is laid out in a “wide” or “horizontal” orientation. Because of this, you need to confirm that the Table Calculation Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN is configured to run as shown below:

This Calculation can be put in the Level of Detail Shelf – but before putting this Calculation in the Level of Detail Shelf, disable automatic updates to the worksheet. The configuration of the Table Calculation now matches the orientation of the view.

Also, the AvgYearlySales Table Calculation needs to be configured as shown below to run correctly in this view:

If you put the Calculation Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN in the Level of Detail Shelf and manually update the view, the Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN Calculation will run. If you go to the database, the data (in this case 20 rows) meeting the filtering condition should have been inserted:

These are the rows with less than 20 distinct products ordered by region, category, and customer segment within a single year.

Alternatively, you could lay out the data in the view with a “vertical” orientation, as shown below:

In the view above I use a copy of the Calculated Field Insert INTO Data From Tableau Nr 2 PANE ACROSS | DOWN which I have named Insert INTO Data From Tableau Nr 2 PANE DOWN | ACROSS and configured it as shown below:

Running the Table Calculation Pane (Down then Across) matches the orientation of the view.

Data Definition Queries

You can also run Data Definition (DDL) Queries within a Calculated Field. For example you could put the following SQL commands that DROP and then RECREATE table into a Calculated Field named DDL_Queries:

“DROP TABLE Superstore_Sales_WEST;DROP TABLE Superstore_Sales_SOUTH;DROP TABLE Superstore_Sales_CENTRAL;DROP TABLE Superstore_Sales_EAST;” +

“SELECT * INTO Superstore_Sales_WEST FROM Orders WHERE Region=” + “‘” + “West” + “‘” + ‘;’ +

“SELECT * INTO Superstore_Sales_SOUTH FROM Orders WHERE Region=” + “‘” + “South” + “‘” + ‘;’ +

“SELECT * INTO Superstore_Sales_CENTRAL FROM Orders WHERE Region=” + “‘” + “Central” + “‘” + ‘;’ +

“SELECT * INTO Superstore_Sales_EAST FROM Orders WHERE Region=” + “‘” + “East” + “‘”

Note the semicolon delimiter between the individual SQL commands. Note that a delimiter is not need after the last DDL statement for orders in the East as this is the last command in the batch of commands.

Note: Thanks again to Leonid Koyfman for an alternate and simpler version of this calculation:

“

DROP TABLE Superstore_Sales_WEST;

DROP TABLE Superstore_Sales_SOUTH;

DROP TABLE Superstore_Sales_CENTRAL;

DROP TABLE Superstore_Sales_EAST;

SELECT * INTO Superstore_Sales_WEST FROM Orders WHERE Region=’West’;

SELECT * INTO Superstore_Sales_SOUTH FROM Orders WHERE Region=’South’;

SELECT * INTO Superstore_Sales_CENTRAL FROM Orders WHERE Region=’Central’;

SELECT * INTO Superstore_Sales_EAST FROM Orders WHERE Region=’East’

“

You could then wrap this Calculation within a Wrapper Calculation named Run_DDL_Queries that would run the SQL DDL Code:

SCRIPT_STR(“

library(RODBC)

crs$odbc <- odbcConnect(‘Tableau_Superstore_Orders’, believeNRows=TRUE)

crs$odbc.tables <- sqlTables(crs$odbc)$TABLE_NAME

crs$dataset <- sqlQuery(crs$odbc,.arg1)

close(crs$odbc)

‘DDL Queries Ran – Check Database Structure’”

,[DDL_Queries]

)

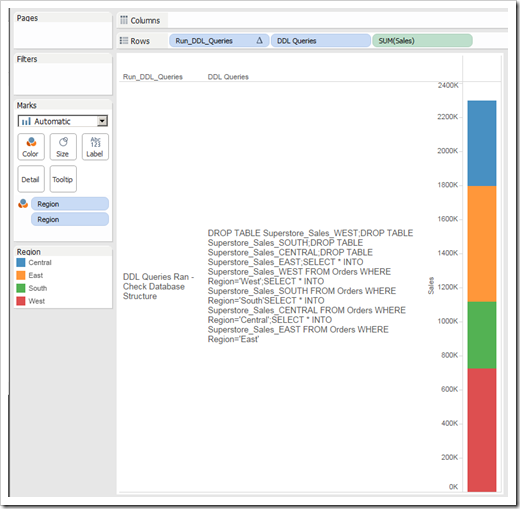

To run the DDL queries below, you could set up a View that looks like:

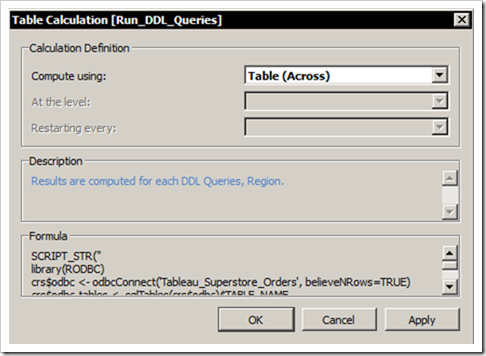

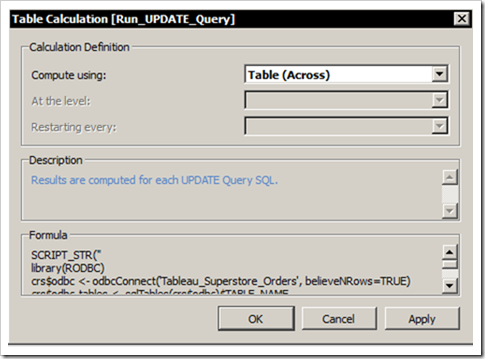

The Run_DDL_Queries Table Calculation needs to be set to run Table Across as shown below given the “horizontal” set up of the view:

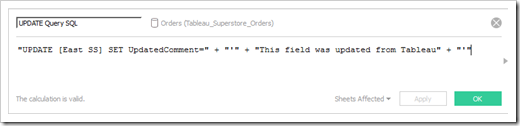

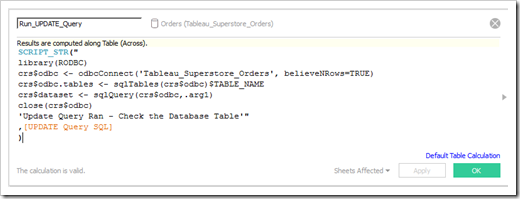

You can also run UPDATE queries within a Wrapper Calculation. As a hypothetical example, if you wanted to UPDATE the contents of a column named UpdatedCommnt in a table named [East SS], you would write a calculation like the one immediately below named UPDATE Query SQL. You could then define a “wrapper calculation” named Run_Update_Query that would run the UPDATE Query SQL calculation as shown below.

Leonid Koyfman has suggested a simpler version of the UPDATE Query SQL calculation:

“UPDATE [East SS] SET UpdatedComment=’This field was updated from Tableau’”

The Run_UPDATE_Query calculation above should be configured to run Table (Across) as shown below:

Assuming there was a table named [East SS] in your database, opening that table after running the Run_UPDATE_Query calculation would show that the UPDATE command worked as expected.

Of course, this was the simplest possible UPDATE query, but you can define and run UPDATE statements that JOIN tables, reference calculations in your Tableau workbook, and include WHERE and HAVING clauses

General Caveats and Considerations

- Based on the author’s experience, it appears that you can’t mix query types within an SQL String that runs within a Wrapper Table Calculation. In other words, trying to run an INSERT query and a DDL Query within the same Wrapper Calculation will fail. On the other hand, running multiple INSERT or DDL Queries within the same Wrapper Calculation works. The author has no theory as to why this is.

- Filtering Views by a Table Calculation (such as AvgYearlySales) causes the SQL Statement within the Wrapper Calculation to run incorrectly. In the example below, we are filtering for Average Yearly Sales of at least $28k. If we were to try and INSERT these records into the Database, the filtering shown below would not be properly applied.

The definition of AvgYearlySales is:

The Calculation above uses the WINDOW_AVG Table Calculation function. Filtering views using Table Calculations is out of scope in this post, but there many interesting Tableau Forum posts that discuss this topic. For example, see: http://www.tableau.com/about/blog/2012/9/jedi-filter-and-table-calc-trick-19004 - Filtering a view by field values (dimensions or measures), or normal Calculations, as we have done in the examples we have covered, works fine.

- Obviously, all SQL commands you write must be composed using the SQL implementation your database expects, and must be supported by the RODBC package we have called in our Calculations. For more information about the RODBC package, check out https://cran.r-project.org/web/packages/RODBC/RODBC.pdf.The examples in this post are aimed at an instance of Microsoft SQL Server.

- RODBC and your Calculation that manipulates the database do not return error messages in case the Calculation fails – your Calculation will fail silently. Therefore, the author recommends that you place the Calculation containing your SQL Code either in the view or in a tooltip, so that you can see how Tableau evaluated your SQL command at run time. You can then examine it and (hopefully) see where things went wrong and make corrections.

- The Superstore dataset that you use may be different from the Superstore dataset this writer has used as Tableau periodically makes changes to this dataset. Therefore row filtering may result in results that do not align to the screen shots used in this post.

- The examples in this post that INSERT data into database tables assume your Tableau connection to the destination table is live (i.e. not a Tableau extract). To see the results of INSERT queries in Tableau connected to a live data source, refresh the data source. If your connection to a given destination table is through an extract, refresh the extract.

- If you run UPDATE or DDL queries against tables you are connected to via a live connection, refresh the data source to see the results of your queries. If the connection is through an extract (assuming you did not add one or more columns to a table the extract is based on), refresh the extract. If a DDL query added one or more columns to a table materialised within an extract, you’ll need to remove the extract and delete the extract file, refresh the (now live) data source, and then re-create the extract to ensure that new columns are available to you in Tableau.

- You need to take care that each SQL statement in the Wrapper Calculation that runs the SQL statement runs only when you want it to. The best way to do this is:

- Build a view that you use to explore your data. Proceed to step b when you have a view you would like to save in your back end database.

- Build another view that applies the same filtering and uses the same Calculations as the view referred to immediately above, but lay out the view either horizontally or vertically as per the examples in the post. Once you have built this sheet, you can re-use it.

- Place the data fields you want to be INSERTED into the back end database on the Rows or Columns Shelf, or in the Level of Detail Shelf. In the examples we have seen, the sales measure was in the view, other calculations and fields were placed in the Level of Detail Shelf.

- Edit the value of the Comment Calculated Field so that it accurately describes the Data View you created in step a, and put the Comment Field in the view or in the Level of Detail Shelf.

- Create / modify the Calculations needed (SQL String and Wrapper Calculation to run the SQL string) to execute the SQL statement you have defined.

- Bring the Calculation that defines the SQL statement into the view (on the Rows or Columns Shelf) and put it in a Tooltip so that you can easily see how Tableau evaluates the SQL statement.

- After you have laid out the view, turn auto updating off in this worksheet.

- Drag the Wrapper calculation that runs the SQL statement you defined to the Level of Detail Shelf in Tableau. As this is a Table Calculation, make sure it is configured to run correctly: Pane Across (and then Down) or Pane Down (and then Across) depending on how out laid out the View as per point b above.

- Double check that you have done the previous steps.

- Manually update the worksheet, which runs the Calculation containing the SQL statement.

- Drag the Wrapper Calculation that runs the SQL statement out of the Level of Detail shelf at this point. This prevents the SQL statement from being run again until you want to run it – otherwise the SQL statement will be run every time the worksheet is updated.

- Turn auto updating for the worksheet back on again. You can now make changes to this sheet and see the updates right away, without running the last defined SQL statement

- In another sheet, connect to the table(s) in your back end database affected by the last SQL statement that ran and confirm that your SQL command worked as expected and you see the data you expect to see in the destination table in the back end database. If your SQL query didn’t work as expected, double check the SQL syntax within the Calculation that defines the SQL statement(s) and run the query again by manually updating the worksheet.

- Return to the first step and build another view.

What for?

Having covered all of this ground, one might ask “what’s the point? Why bother going through all this just to move data from one place to another – especially as the data we see in Tableau came from a database in of one type or another in the first place?”

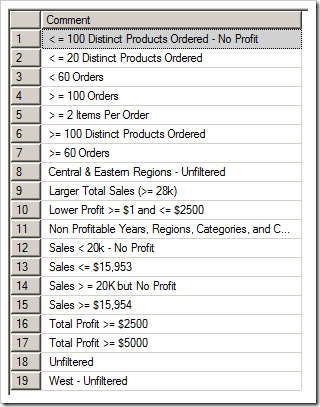

When we aggregate, manipulate, and filter data in Tableau, we create (hopefully interesting) sub-sets of the entire dataset. Of course, we can create and combine Sets in Tableau, and filter by them. This writer filtered the Superstore data in a variety of ways, and exported these views to SQL Server – updating the value of the Comment Calculation with each change of filtering. The list below shows the different filtering applied to the dataset (based on the value of the Comment Field):

Each of these filtering conditions could have been saved to a Tableau Set. That being true, saving the data returned by each filtering condition (also a Set), persisted in aggregated form in a database table enables a very fine grained view of the relationships between members of one or more of these Sets by filtering on the value of the Comment data field in a Tableau view. We can observe the various contexts in which one or more conditions exist – and “capture” these contexts within a “Super Set” created in Tableau.

An Example

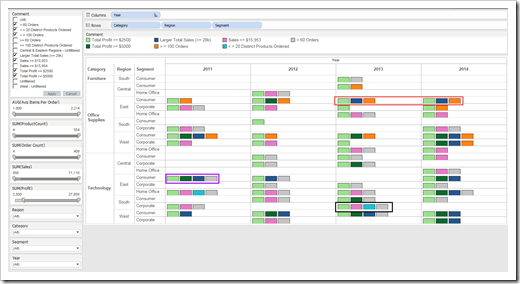

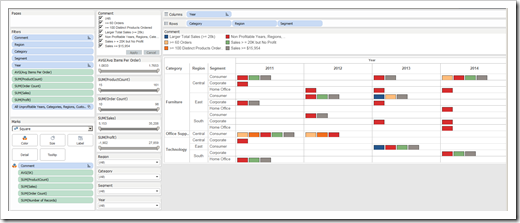

The Tableau view below looks at years, categories, regions, and customer segments where the total yearly profit was at least $2500 – which in relation to the total dataset would be considered “Well Performing Categories, Regions, and Customer Segments”.

The view surfaces some interesting situations:

- There are instances of good profitability in years, categories, regions, and customer segments where there were <60 orders, fewer than 20 distinct products ordered, and where total sales was below the yearly total dataset average per category, region, and year ($15,954). An example of this would be 2013 in the Technology category, in the South, within the Corporate Customer segment (see the black rectangles in the View above). Despite less than optimal business conditions, there was profit.

- If we look at the Office Supplies category in 2013 and 2014, in the East within the Consumer Customer segment, we see that despite very high sales (>$28k), a higher number of orders (>100), profit was between $2500 and $5000. We could have reasonably expected that total profit would have exceeded $5000 given the more favorable business conditions (see the red rectangle in the view above).

- If we look at the Technology category in 2011, in the East and Consumer Customer segment we see that there were high sales (>28K) and higher levels of profit (>$5000) with fewer than 60 orders (see the purple rectangle in the view above).

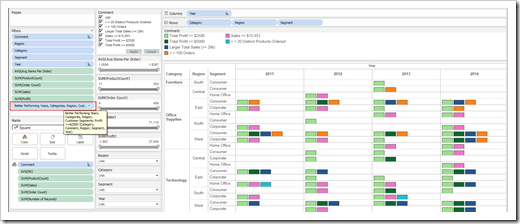

The data in this view could be saved as a Set within Tableau named “Better Performing Years, Categories, Regions, Customer Segments: Profit >=$2500”. I can reset all filtering of the view, and filter using the Set as shown below (see the red rectangle in the Filters Shelf):

This type of analysis can be enhanced by filtering the data further within Tableau as shown below:

This view helps surface two situations in 2013 within the Technology category within the Central region and Corporate Customer segment and Southern region within the Consumer Business segment (see black rectangles above) where higher levels of Profits were achieved (>= $4000) despite there being <60 orders. The lower end cut-off for Profit has been raised from $2500 to $4000 through filtering by Profit. The lighter green rectangles now represent Years, Categories, Regions, and Customer Segments where profit was at least $4000.

Whatever orders there were, they tended to higher profit.

A drilldown from this view could show us whether or not the products ordered in these Regions and Customer Segments differed from what was ordered in other Regions and Customer Segments within the Category in 2013.

We could then create a Set within Tableau, perhaps naming it “Profit >$4000 in Better Performing Years, Categories, Regions, and Segments”.

Below: the data is being filtered under the Specifications of a Set named “All Unprofitable Years, Categories, Regions, and Customer Segments”.

This View brings some interesting facts to light. There are instances of larger amounts of Product Sales (> than the all Dataset average of $15,954, > $20,000 and even > $28,000), as well as instances with >60 and >100 orders, and yet none of the Years, Categories, Regions, or Customer Segment turned a Profit.

The ability to observe the various contexts in which one or more conditions exist – and “capture” these contexts within a Tableau “Super Set” is in the opinion of the author, a useful Analytic Technique.

Please Note

Remember that:

- R is external to Tableau: the R platform must be downloaded and installed on your system or on a system your computer has permissions to connect to.

- Tableau cannot use R if the Rserve package is not loaded and running before starting Tableau.

- The R programming language uses libraries that are delivered in (documented) packages

- Packages that your Tableau Calculated Fields will use must be installed within your R environment.

- The library your Calculated Fields will use must be loaded within your R environment or by your Tableau Calculations (using a library[Library Name] statement).

- Some R libraries have dependencies on other R libraries.

- Some R libraries are trickier than others to load (example: rJava, zoo, etc.) You may need to change your system PATH statement to get some libraries to run (Control Panel -> System -> Advanced).

- Tableau Calculated Fields that leverage R are Table Calculations and therefore will most likely require customisation to function as expected.

Performance and Upper Limits

The Superstore dataset used by the author contains just under 10,000 rows of data. On the author’s system (64 bit Windows 7, 64 bit Tableau, 64 bit SQL Server, 16 GB system RAM, I7 processor) the Tableau calculations that run SQL commands, or write data to text files using the Superstore data source run instantaneously.

Obviously, there is an upper limit as to how many rows can be manipulated by a SQL statement “hosted” within a single Tableau Calculation. Before your SQL runs, Tableau does the heavy lifting to aggregate the measures and calculations in a view based on the active data source and filter the results accordingly.

If you have written INSERT statements, the aggregated rows in a Tableau “materialised” View are inserted into a database table, it’s a question of how many rows (<100?, >1000?, >1000,000? >1,000,000?) are to be inserted in your back end database given the capabilities of your system, the back end database, RODBC, and communications over port 6133 on your system. If you have written DDL queries that DROP tables in a back end database, and / or SELECT data in Tables in the back end database INTO other tables in your back end database, it’s a question of how quickly your back end database can execute these statements through an ODBC connection “hosted” by Tableau.

Ditto if you have written UPDATE queries that operate on tables in your back end database (and do not update these tables with any data from the current Tableau view). If you are updating rows in your back end data with some or all of the data in a Tableau view, there will be an upper limit as to how many rows you will be able to update within a “hosted” ODBC connection within a single or series of Tableau Calculations. If you want to push the envelope, you’ll need to experiment – the author makes no guess as to what will be possible on your system.

As Tableau does not give you the chance to recover unsaved work in the event of a crash, save your Tableau workbook files often.

Links on Using Tableau and R

The Download Links

Here are the files used for the example presented in this and the previous post:

The following text file should be read first and explains how to use the supplied resources:

This is the Tableau packaged workbook (zipped) showing the completed examples:

Download Tableau Read Write Tab 9.3 TWBX_Version zipped (924.3K)

The zipped SQL Server backup of the data required for the examples:

Download SQL Tableau Superstore Orders.zip (2519.7K)

The zipped Microsoft Access backup of the data required for the examples:

Download Access Tableau Superstore Orders.zip (2521.4K)

The Filtered Orders Query CSV file (required for one of the examples):

Download FilteredOrdersQuery.csv (28.4K)

The Orders Query CSV file (required for one of the examples):

Download OrdersQuery.csv (2251.1K)

Robert’s Note

Many thanks to Michael for this brilliant article and the amazing detailed and thorough step-by-step instructions. If you enjoyed Michael’s posts, please drop him a line in the comment section. Thank you.

Leave a Reply